CFS Internals

The Linux Scheduler

We first describe how Linux’s Completely Fair Scheduling (CFS) algorithm works on a single-core single-user system (Section 2.1). From this perspective, the algorithm is quite simple. Then, in (Section 2.2) we explain how limitations of modern multicore systems force developers to work-around potential performance bottlenecks, which results in a sub- stantially more complex and bug-prone implementation.

Summary

weight

A thread’s weight is essentially its priority, or niceness in UNIX parlance.

timeslice

The scheduler defines a fixed time interval during which each thread in the system must run at least once. The interval is divided among threads proportionally to their weights.

vruntime

runtime of the thread divided by its weight. Once a thread’s vruntime exceeds its assigned timeslice, the thread is pre-empted from the CPU if there are other runnable threads available. A thread might also get pre-empted if another thread with a smaller vruntime is awoken.*

runqueue

red-black tree of RUNNABLE thread, in increasing order of vruntime. When a CPU looks for a new thread to run it picks the leftmost node in the red-black tree, which contains the thread with the smallest vruntime.

per-core runqueue

The motivation for per-core runqueues is that upon a context switch the core would access only its local runqueue, when it looks for a thread to run. Context switches are on a critical path, so they must be fast. Accessing only a core-local queue prevents the scheduler from making potentially expensive synchronized accesses, which would be required if it accessed a globally shared runqueue.

load balancing

In order for the scheduling algorithm to still work correctly and efficiently in the presence of per-core runqueues, the runqueues must be kept balanced.

load balancing scenarios 1

one queue has some number of high-priority threads and another queue has the same number of low priority threads. Then high-priority threads would get the same amount of CPU time as low-priority threads. That is not what we want. One idea, then, is to balance the queues based on threads’ weights, not their number.

load balancing scenarios 2

Consider a scenario with ten threads in two runqueues: one thread is of high priority and nine threads are of low priority . Let us assume that the weight of the high-priority thread is nine times higher than those of the low-priority threads. With the load balanced according to threads’ weights, one runqueue would contain the high-priority thread, while the other would contain the nine low-priority threads. The high-priority thread would get nine times more CPU than the low-priority threads, which appears to be what we want. However, suppose that the high- priority thread often sleeps for short periods of time, so the first core often goes idle. This core would have to frequently steal work from the other core’s runqueue to keep itself busy. However, we do not want work stealing to become the common case, because this defeats the purpose of per-core runqueues. What we really want is to balance the runqueues in a smarter way, accounting for the fact that the high priority thread does not need a whole core.

load

To achieve this goal, CFS balances runqueues based on a metric called load, which is the combination of the thread’s weight and its average CPU utilization.* If a thread does not use much of a CPU, its load will be decreased accordingly.

cache locality or NUMA

A basic load balancing algorithm would compare the load of all cores and then transfer tasks from the most loaded core to the least loaded core. Unfortunately this would result in threads being migrated across the machine without considering cache locality or NUMA. Instead, the load balancer uses a hierarchical strategy.

scheduling domain

The cores are logically organized in a hierarchy, at the bottom of which is a single core. How the cores are grouped in the next levels of the hierarchy depends on how they share the machine’s physical resources.

- sharing functional units (e.g., FPU),

- sharing a last-level cache (LLC)

- Different NUMA nodes's varying connectivity

Each level of the hierarchy is called a scheduling domain.

group scheduling

autogroup feature

On a single-CPU system, CFS is very simple

Linux’s CFS is an implementation of the weighted fair queueing (WFQ) scheduling algorithm, wherein the avail- able CPU cycles are divided among threads in proportion to their weights. To support this abstraction, CFS (like most other CPU schedulers) time-slices the CPU among the run- ning threads. The key decisions made in the scheduler are: how to determine a thread’s timeslice? and how to pick the next thread to run?

The scheduler defines a fixed time interval during which each thread in the system must run at least once. The interval is divided among threads proportionally to their weights. The resulting interval (after division) is what we call the timeslice. A thread’s weight is essentially its priority, or niceness in UNIX parlance. Threads with lower niceness have higher weights and vice versa.

When a thread runs, it accumulates vruntime (runtime of the thread divided by its weight). Once a thread’s vruntime exceeds its assigned timeslice, the thread is pre-empted from the CPU if there are other runnable threads available. A thread might also get pre-empted if another thread with a smaller vruntime is awoken.

Threads are organized in a runqueue, implemented as a red-black tree, in which the threads are sorted in the increasing order of their vruntime. When a CPU looks for a new thread to run it picks the leftmost node in the red-black tree, which contains the thread with the smallest vruntime.

On multi-core systems, CFS becomes quite complex

In multicore environments the implementation of the sched- uler becomes substantially more complex. Scalability con- cerns dictate using per-core runqueues.

The motivation for per-core runqueues is that upon a context switch the core would access only its local runqueue, when it looks for a thread to run. Context switches are on a critical path, so they must be fast. Accessing only a core-local queue prevents the scheduler from making potentially expensive synchronized accesses, which would be required if it accessed a globally shared runqueue.

However, in order for the scheduling algorithm to still work correctly and efficiently in the presence of per-core runqueues, the runqueues must be kept balanced.

Consider a dual-core system with two runqueues that are not balanced. Suppose that one queue has one low-priority thread and another has ten high-priority threads. If each core looked for work only in its local runqueue, then high-priority threads would get a lot less CPU time than the low-priority thread, which is not what we want. We could have each core check not only its runqueue but also the queues of other cores, but this would defeat the purpose of per-core runqueues.

Therefore, what Linux and most other schedulers do is pe- riodically run a load-balancing algorithm that will keep the queues roughly balanced.

Conceptually, load balancing is simple. In 2001, CPUs were mostly single-core and commodity server systems typ- ically had only a handful of processors. It was, therefore, difficult to foresee that on modern multicore systems load balancing would become challenging. Load balancing is an expensive procedure on today’s systems, both computation- wise, because it requires iterating over dozens of runqueues, and communication-wise, because it involves modifying re- motely cached data structures, causing extremely expensive cache misses and synchronization. As a result, the scheduler goes to great lengths to avoid executing the load-balancing procedure often. At the same time, not executing it often enough may leave runqueues unbalanced. When that hap- pens, cores might become idle when there is work to do, which hurts performance. So in addition to periodic load- balancing, the scheduler also invokes “emergency” load bal- ancing when a core becomes idle, and implements some load-balancing logic upon placement of newly created or newly awoken threads. These mechanisms should, in theory, ensure that the cores are kept busy if there is work to do.

The load balancing algorithm

Crucial for understanding the load balancing algorithm is the metric that the CFS scheduler uses to track load. We begin by explaining the metric and then describe the actual algorithm.

The load tracking metric

A strawman load-balancing algorithm would simply ensure that each runqueue has roughly the same number of threads. However, this is not necessarily what we want. Consider a scenario with two run- queues, where one queue has some number of high-priority threads and another queue has the same number of low- priority threads. Then high-priority threads would get the same amount of CPU time as low-priority threads. That is not what we want. One idea, then, is to balance the queues based on threads’ weights, not their number.

Unfortunately, balancing the load based solely on thread weights is not sufficient either. Consider a scenario with ten threads in two runqueues: one thread is of high priority and nine threads are of low priority. Let us assume that the weight of the high-priority thread is nine times higher than those of the low-priority threads. With the load balanced according to threads’ weights, one runqueue would contain the high-priority thread, while the other would contain the nine low-priority threads. The high-priority thread would get nine times more CPU than the low-priority threads, which appears to be what we want. However, suppose that the high- priority thread often sleeps for short periods of time, so the first core often goes idle. This core would have to frequently steal work from the other core’s runqueue to keep itself busy. However, we do not want work stealing to become the common case, because this defeats the purpose of per-core runqueues. What we really want is to balance the runqueues in a smarter way, accounting for the fact that the high priority thread does not need a whole core.

To achieve this goal, CFS balances runqueues not just based on weights, but based on a metric called load, which is the combination of the thread’s weight and its average CPU utilization. If a thread does not use much of a CPU, its load will be decreased accordingly.

Additionally, the load-tracking metric accounts for vary- ing levels of multithreading in different processes. Consider a scenario where we have one process with lots of threads, and another process with few threads. Then the threads of the first process combined will receive a lot more CPU time than the threads of the second process. As a result, the first pro- cess would use most of the CPU cycles and starve the other process. This would be unfair. So as of version 2.6.38 Linux added a group scheduling feature to bring fairness between groups of threads (cgroup feature). When a thread belongs to a cgroup, its load is further divided by the total number of threads in its cgroup. This feature was later extended to automatically assign processes that belong to different ttys to different cgroups (autogroup feature).

The load-balancing algorithm

A basic load balancing algorithm would compare the load of all cores and then transfer tasks from the most loaded core to the least loaded core. Unfortunately this would result in threads being mi- grated across the machine without considering cache local- ity or NUMA. Instead, the load balancer uses a hierarchical strategy.

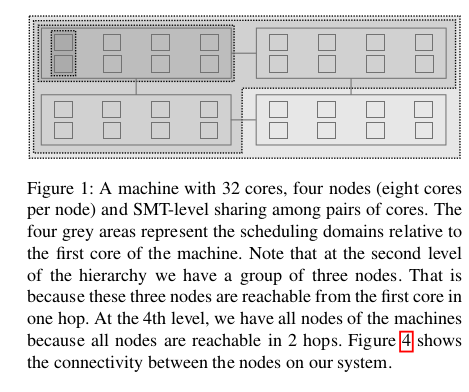

The cores are logically organized in a hierarchy, at the

bottom of which is a single core. How the cores are grouped

in the next levels of the hierarchy depends on how they

share the machine’s physical resources. In the example pro-

vided here we describe the hierarchy on our experimental

machine (see Table 5), where pairs of cores share functional

units (e.g., FPU), and groups of eight cores share a last-level cache (LLC). A group of eight cores sharing an LLC form a

NUMA node. Different NUMA nodes have varying connec-

tivity as explained below and as shown in Figure 4. Conse-

quently, on our target system, at the second level of the hier-

archy we have pairs of cores, and at the next level we have

groups of eight cores, each sharing an LLC (e.g., a NUMA

node). NUMA nodes are further grouped according to their

level of connectivity [23]. Nodes that are one hop apart from

each other will be at the next level, and so on. An example

of such a hierarchy is shown in Figure 1. Each level of the

hierarchy is called a scheduling domain.

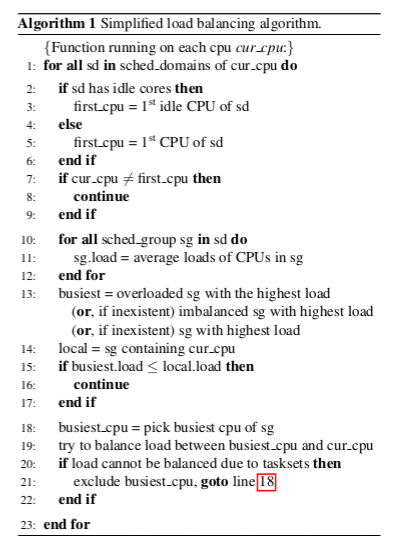

The load balancing algorithm is summarized in Algo-

rithm 1. Load balancing is run for each scheduling domain,

starting from the bottom to the top. At each level, one core

of each domain is responsible for balancing the load. This

core is either the first idle core of the scheduling domain

if the domain has idle cores whose free CPU cycles can be

used for load balancing, or the first core of the scheduling

domain otherwise (Lines 2–9). Following this, the average

load is computed for each scheduling group of the schedul-

ing domain (Line 10), and the busiest group is picked, based

on heuristics that favor overloaded and imbalanced groups

(Line 10). If the busiest group’s load is lower than the lo-

cal group’s load, the load is considered balanced at this level

(Line 16). Otherwise, the load is balanced between the lo-

cal CPU and the busiest CPU of the group, with a tweak

to ensure that load balancing works even in the presence of

tasksets (Lines 18–23).

Assume, for the time being, that this algorithm is run by all cores in every load-balancing period; in the next section we will explain that, as an optimization, not all cores actually do.

A core executing the algorithm begins at the second-to-lowest level of the hierarchy and balances the load one level below. For example, on the system in Figure 1 the core will begin at the pair-of-cores level and will balance the load between the two individual cores contained therein. Then, it will proceed to the level of the NUMA node, balancing the load one level below (among pairs of cores, in this case), but not between individual cores of the NUMA node. In a scheduling domain, the sets of cores among which the load balancing is performed are called scheduling groups. At the NUMA node domain, there will be four scheduling groups, each corresponding to a pair of cores. The core will find the busiest scheduling group other than its own and will steal tasks from the busiest core in that group.

Optimizations

The scheduler prevents duplicating work by running the load-balancing algorithm only on the designated core for the given scheduling domain.

When each active core receives a periodic clock tick and begins running the load-balancing algorithm, it checks whether it is the lowest-numbered core in the domain (if all cores are busy), or if it is the lowest-numbered idle core (if any core is idle). This is shown in Line 2 of the Algorithm. If this condition holds, the core deems itself as designated and continues to run the algorithm.

Power-related optimizations may further reduce the fre- quency of load balancing on an idle core. Originally, idle cores were always awoken on every clock tick; at this point they would run the load-balancing algorithm. However, since version 2.6.21 Linux has the option (now enabled by default) to avoid periodically waking up sleeping cores: they enter a tickless idle state, in which they can reduce their energy use. The only way for a core in this state to get work when another core is overloaded is to be awoken by another core. To this end, on each scheduling tick, if a core deems it- self “overloaded”, it checks whether there have been tickless idle cores in the system for some time, and if so, it wakes up the first tickless idle core and assigns it the role of NOHZ balancer. The NOHZ balancer core is responsible, on each tick, to run the periodic load balancing routine for itself and on behalf of all tickless idle cores.

On top of periodic load balancing, the scheduler also balances load when waking up threads. When a thread wakes up, after sleeping or waiting for a resource (e.g., locks, I/O), the scheduler tries to place it on the idlest core. Special rules apply when the thread is awoken by another thread (waker thread). In that case the scheduler will favour cores sharing a cache with the waker thread to improve cache reuse.